Faculty who teach in the undergraduate computer science major, offered by both the McCormick School of Engineering and the Weinberg College of Arts and Sciences, are finding their courses more popular than ever. Enrollments are exploding across the board as undergraduates seek the basic computer science skills that have become a prerequisite for a growing number of careers in the digital age. While it’s certainly encouraging to see a groundswell of student interest in this in-demand field, some faculty and staff are wondering how they will keep up. Jason Hartline, Associate Professor of Electrical Engineering & Computer Science, tackled this problem head-on by finding a way to use student engagement to close the gap between the number of qualified TAs available and student demand.

Increasing course popularity might seem like a fantastic "problem" to have, but Hartline argues that the growth in class size will not be sustainable without some faculty-driven ingenuity.

“Enrollments are exploding across the board in Computer Science, but staff is not,” said Hartline. “Core staff lags behind.”

His Design & Analysis of Algorithms and CS 101 classes have 90 and 250 students, respectively. With that many students, Hartline finds that he and his TAs are fighting an increasingly uphill battle to keep up with both instruction and feedback.

Peer Grading and Review

Hartline used a prototype peer grading and review system in his Design & Analysis of Algorithms and CS 101 classes during the 2016-2017 academic year. Under traditional TA grading, it took two and a half to three weeks to return grades and comments to students, so students had already moved on to the next topic before they received feedback. With peer grading, students get feedback within three days and a final grade on their assignments within five.

Says Hartline of the peer grading: “It’s a win-win-win in terms of all the criteria you care about in teaching: it lightens the teaching load for staff, speeds up the turnaround time for getting students feedback, and provides a learning opportunity through dual acts of reviewing the work of your peer students and communicating your ideas after seeing other student work.”

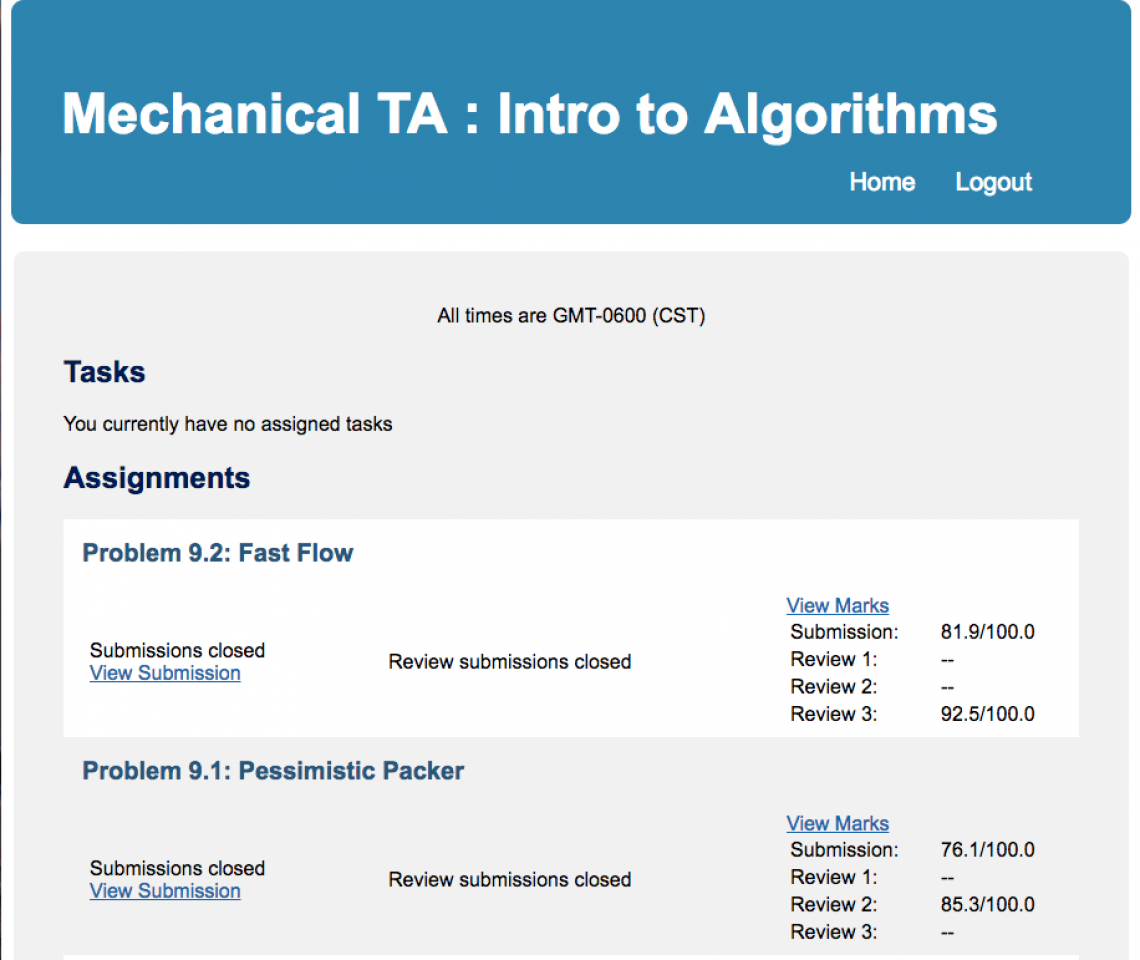

Screenshot of peer grading dashboard

Added Pedagogical Benefit

While the time savings for instructors are significant, the most exciting outcome was student-driven learning. This was especially important in Design & Analysis of Algorithms, an upper-level computer science class in which assignments are written in a mix of English and formal mathematical language. Hartline explained that “for their homework students are describing algorithms and providing a proof of correctness to show why it solves what it does.” He found wide variation in the quality of the writing in students’ solutions.

Learning how to do this type of work is challenging for all but the most advanced students.

“The majority of students will write solutions that are hard to understand what they are talking about, much less verify that it is actually an algorithm and show that it is correct.”

Part of the reason students turned in solutions like these is that they could not tell a good solution from a bad one. Peer review gives students a hands-on way to examine their peers’ work and learn what makes an effective write-up: once you can tell good from bad, you can apply that judgment to your own work.

Writing up an algorithm homework solution is also a communication task, and communication is a skill students will need to succeed in their professional lives.

“In the work world you need to communicate with a team of software engineers and clients,” said Hartline. “How can you get good at that task if you only write solutions and never read solutions?”

Student Feedback

In an informal survey at the end of Design & Analysis of Algorithms, Hartline asked students whether the peer grading process helped them learn the course material and prepare better solutions of their own. The responses confirmed what he had hoped: most students said peer grading benefited their own work, and the benefit was greatest for students who had initially struggled with the material.

“For the students who easily grasp the subject matter, peer grading is extra work, but the students who struggle are saying that they feel more confident with learning the material and preparing solutions,” said Hartline.

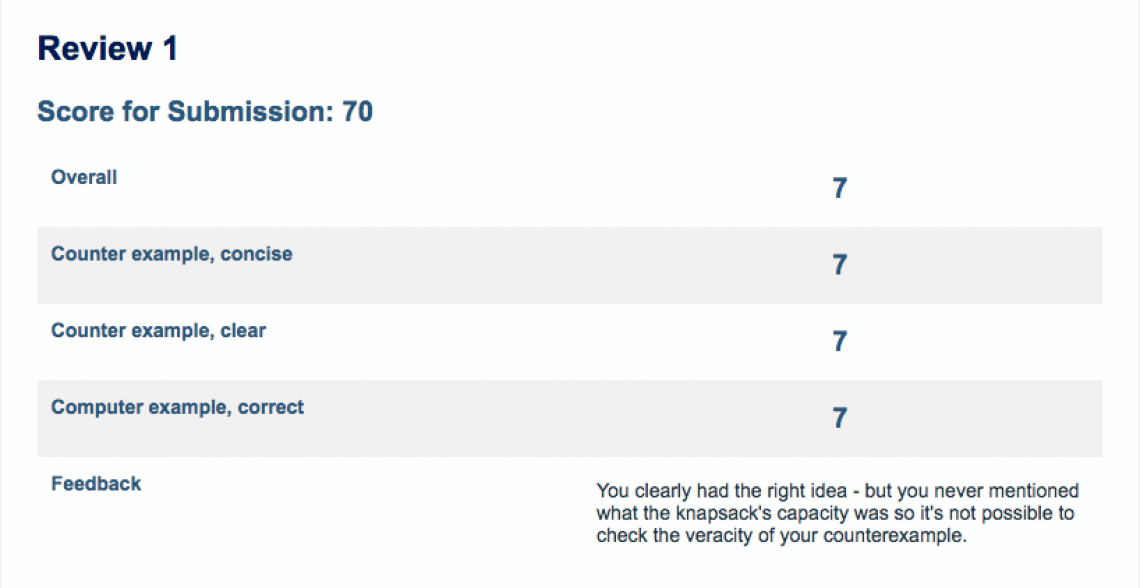

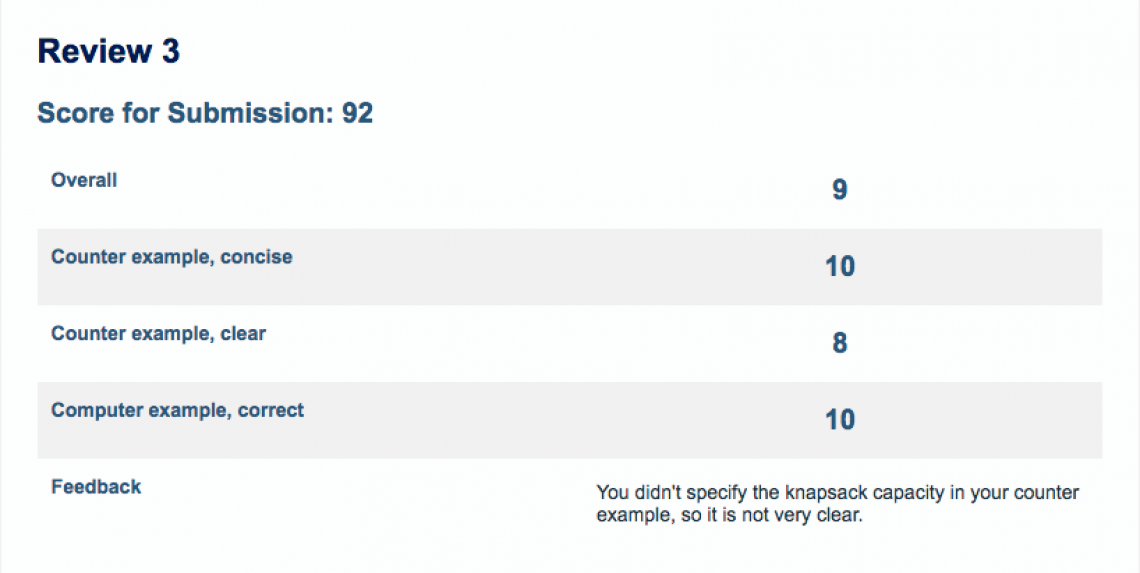

Screenshots of peer grading rubric and feedback

What’s Next?

Prof. Hartline is currently working with Northwestern IT to develop a second-generation peer assessment app that will plug directly into Canvas, letting students and faculty colleagues work within a familiar platform.

“Other faculty may have different needs, but I hope that assessing those needs will help us to arrive at the best system. NUIT has been instrumental in helping to bring the platform more broadly to NU and other faculty.”

The goal is to develop a fully anonymous review system, which is important in undergraduate classes. Although Hartline admits that he doesn't have the means to build a polished, smooth software system on his own, he is confident that his partnership with Northwestern’s developers will make it happen.

Digital Learning will follow up on this story when Prof. Hartline’s app is ready for Canvas integration and for use by other instructors. Stay tuned!