Rick Salisbury is the associate director of educational technology at the Kellogg School of Management. In this guest post, Rick describes the growing use of mixed reality devices in higher education.

At Kellogg, we regularly hold an event called the TechKitchen to highlight tools and technology that faculty can use to enhance their teaching. Past TechKitchens have featured demos of AR and VR hardware such as the Microsoft HoloLens, the Oculus Rift, and the HTC Vive.

This past quarter I had several opportunities to introduce these immersive tools to faculty outside of Kellogg, first at TEACHxperts and most recently at Feinberg's Faculty Academy of Medical Educators TIME lecture series. It’s exciting to see the enthusiasm for innovation in the learning process and to discuss how faculty from different disciplines can incorporate the technology into their course design.

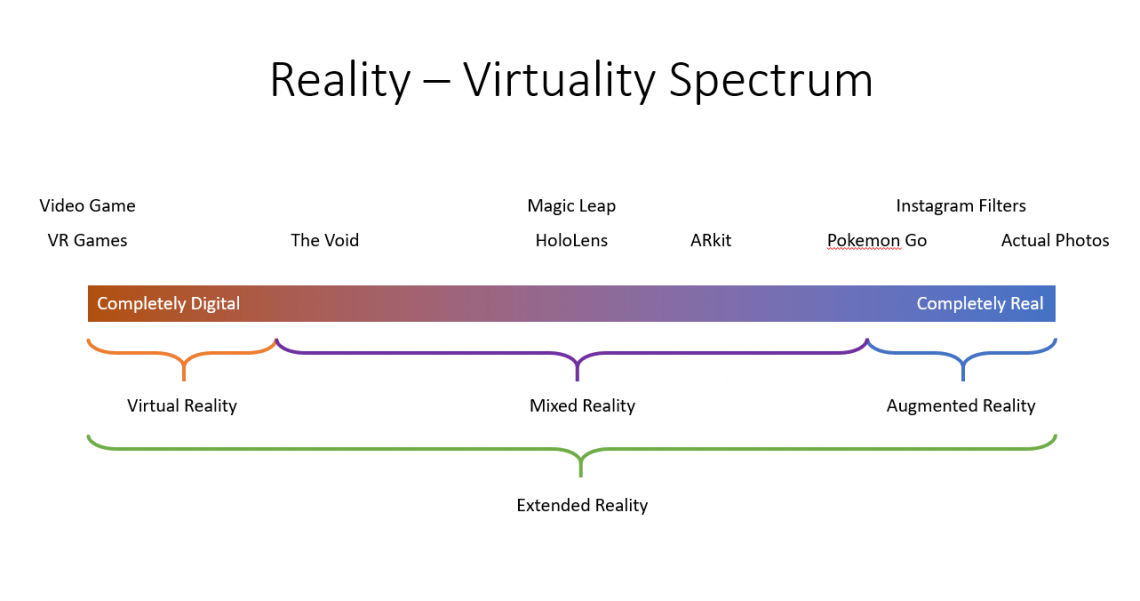

For anyone new to these technologies, it is useful to start by breaking down the differences between AR, VR, and the family of related terms.

Virtual Reality

VR places the participant in a completely computer-generated world. Advanced VR headsets, such as the HTC Vive and Oculus Rift, let individuals move freely through this 3D digital space and feel a true sense of presence in it.

One of the titles I've included in our demos is Apollo 11 VR. A truly immersive documentary, the Apollo 11 VR experience allows participants to accompany the astronaut team on their mission to the moon and relive the historic events of 1969. Because the original NASA communications audio is played back in real time, users experience the launch into space first-hand.

Augmented Reality

AR starts from your existing surroundings and overlays digital objects onto them, viewed through a mobile phone, tablet, or specialized glasses such as the HoloLens or the Magic Leap One.

A fun example of AR is Froggipedia, which was recently named the top iPad app of 2018. Froggipedia places a virtual frog on a real table or other flat physical surface. Users can then isolate and view the frog's individual anatomical systems.

The Intersection of AR and VR – Mixed Reality

The lines between AR and VR are quickly blurring as new headsets increasingly include outward-facing cameras and AR digital objects become able to detect and react to the physical environment. This has given rise to the term mixed reality (MR). Mixed reality anchors virtual objects to the real world by digitally mapping the physical space, allowing the virtual and real worlds to interact.

In September, the FDA cleared the first mixed reality application for surgical use. The OpenSight app takes 3D images created from patient scans and overlays them on the patient's body. The digital images adjust as the patient moves and assist clinicians with pre-operative planning.

Finally, the whole family of “realities” has been dubbed XR, or extended reality, an umbrella term that covers any application along the reality-virtuality spectrum.

Teaming Up

One of the limitations of our earlier demos is that we intentionally focused on simple, single-user experiences that are easy to reset and rerun. In addition, we usually don’t have the space or equipment to set up multiple connected devices.

I fear this can give the impression that VR and AR are solo experiences, when in fact many are intended to be collaborative social environments that open up powerful opportunities for exploration, coaching, and mentoring.

One of my favorite examples of this comes from the gaming world. In Star Trek: Bridge Crew, each teammate takes on a distinct role with its own set of responsibilities. The captain, tactical officer, operations officer, and flight control officer must all communicate and work together to complete each mission.

What’s Next?

While Star Trek: Bridge Crew shows the power of a connected virtual world, designing custom learning experiences does not require a team of dedicated game developers. New platforms and tools are available to ease the often daunting content creation process:

- Amazon Web Services has developed Amazon Sumerian, a cloud-based platform for building AR and VR applications without specialized programming or 3D graphics expertise.

- Immersive VR Education, the company that created the Apollo 11 VR experience, has released ENGAGE, a platform designed specifically for instructors to create and host interactive class sessions.

It is still early days for this technology, but expect these virtual experiences to become a common way to complement classroom instruction and improve learner outcomes.